What happens when you feel unconditional love for your cloud provider? You trust your cloud services too much. That leads to mistakes, and the most critical blunders are about pricing and security. Some would say it's really just one: pricing :)

Eventually, you miss your success criteria and budget estimates, just as Apollo 13 missed the Moon.

I have no doubt you will agree: we don't want to be anywhere near that situation. As Murphy's law says, anything that can go wrong will go wrong.

Here is a case study of two pricing mistakes I made in Q4 2019. I am sharing the details in the hope that you can avoid them.

By the way, even though my examples are about specific Azure services, you can make similar mistakes with other services or other cloud providers.

To use cloud services efficiently, you need to be extra cautious.

Here’s how to waste 1225 Eur on Databricks

The first mistake is related to Databricks. Remember: storage is cheap, computation is expensive.

The workhorse for data engineering and data science in Azure is Databricks. Its price is based primarily on cluster uptime.

When I calculate the price for Databricks, I try to figure out things like the number of users, data volume and complexity, and working hours. Based on the working hours, I can make initial assumptions about how long the interactive clusters might run.
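A first estimate can be as simple as clusters × uptime hours × hourly cost. The sketch below assumes a single blended cost per cluster-hour (VMs plus DBUs); the 10 Eur figure is a hypothetical placeholder, not an Azure price. It also shows why a cluster that is never shut down breaks the estimate.

```python
def monthly_cluster_cost(
    clusters: int,
    hours_per_day: float,
    days_per_month: int,
    cost_per_cluster_hour: float,
) -> float:
    """Rough monthly estimate: total cluster uptime multiplied by an hourly cost."""
    uptime_hours = clusters * hours_per_day * days_per_month
    return uptime_hours * cost_per_cluster_hour


RATE = 10.0  # hypothetical Eur per cluster-hour (VMs + DBUs), not a real price

# Plan: 2 interactive clusters, 8 working hours a day, 21 workdays
planned = monthly_cluster_cost(2, 8, 21, RATE)
# Reality if nobody shuts them down: 24 hours a day, every day of the month
always_on = monthly_cluster_cost(2, 24, 30, RATE)

print(f"Planned:   {planned:.0f} Eur")
print(f"Always on: {always_on:.0f} Eur")
```

The ratio between the two numbers depends only on the hours, not on the rate you plug in.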

And here's the magic (especially relevant outside regular working hours): admins don't need to terminate the clusters at an agreed time. Instead, Databricks has an auto-termination feature.

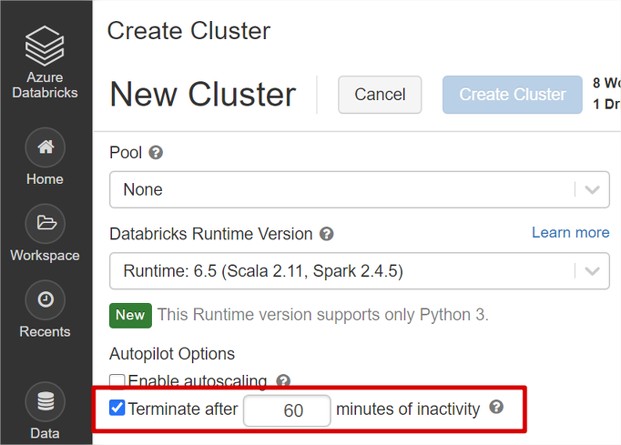

During Databricks cluster creation, there is a simple checkbox, visible in the image above.

When enabled, the cluster will terminate after the specified time interval of inactivity.
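If you create clusters through the Databricks Clusters API (`POST /api/2.0/clusters/create`) instead of the UI, the same setting is the `autotermination_minutes` field. The sketch below only builds the request body; the runtime version and VM size are placeholder values, and the 0 or 10 to 10000 minute range reflects the API documentation as I understand it, so verify it against your workspace.

```python
import json


def cluster_spec(name: str, idle_minutes: int) -> dict:
    """Build a request body for clusters/create with auto-termination set."""
    # 0 disables auto-termination; other values must fall in the documented range.
    if idle_minutes != 0 and not 10 <= idle_minutes <= 10000:
        raise ValueError("autotermination_minutes must be 0 or between 10 and 10000")
    return {
        "cluster_name": name,
        "spark_version": "13.3.x-scala2.12",  # placeholder runtime version
        "node_type_id": "Standard_DS3_v2",    # placeholder Azure VM size
        "num_workers": 2,
        "autotermination_minutes": idle_minutes,  # the checkbox + interval in the UI
    }


spec = cluster_spec("workshop-cluster", idle_minutes=60)
print(json.dumps(spec, indent=2))
```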

Inactivity? Great, that means the cluster will not run when there are no users and I will not pay for it.

Not exactly!

A Databricks cluster counts as inactive only when there are no running commands or active job runs. It doesn't matter whether users are logged in or not.

What if there is a bug and a task gets stuck running? What if someone started a streaming listener and forgot about it? What if someone executed a “while True:” loop?

I understand the reasoning: you can't just terminate a cluster while it is running a piece of code.

But sometimes, your cluster might keep running because of some rubbish code that your team member executed a while ago.
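Here is a toy model of that rule, under my own simplifying assumption that the cluster is sampled once per minute: only running commands or jobs reset the idle timer, while logged-in users do not. The `Minute` class and the scenarios are hypothetical, but they show why a forgotten loop keeps the cluster (and the bill) alive all night.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Minute:
    """What happens on the cluster during one minute (hypothetical model)."""
    users_logged_in: int
    running_commands: int


def termination_minute(timeline: List[Minute], idle_limit: int) -> Optional[int]:
    """Return the minute at which the cluster would auto-terminate, or None."""
    idle = 0
    for t, minute in enumerate(timeline):
        # Only running commands/jobs reset the idle counter; users do not.
        idle = 0 if minute.running_commands > 0 else idle + 1
        if idle >= idle_limit:
            return t
    return None


# Evening: users left their notebooks open, but nothing is running.
quiet_evening = [Minute(users_logged_in=3, running_commands=0)] * 180
# Night: nobody is logged in, but a forgotten `while True:` loop is running.
stuck_night = [Minute(users_logged_in=0, running_commands=1)] * 180

print(termination_minute(quiet_evening, idle_limit=120))  # 119
print(termination_minute(stuck_night, idle_limit=120))    # None
```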

Lesson #1: Don't trust auto-termination blindly. Terminate your cluster manually when you are not using it.
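A manual check can be semi-automated. The sketch below assumes you already fetched the `clusters` array from the Clusters API list endpoint (`GET /api/2.0/clusters/list`) and only looks at the `cluster_id` and `state` fields; the office hours and sample IDs are hypothetical. Scheduled in the evening, it returns the clusters that are still running, which you could then review and terminate (for example with `POST /api/2.0/clusters/delete`, which terminates a cluster without permanently deleting it).

```python
from datetime import datetime, time
from typing import List

# Hypothetical office hours; adjust to your team.
WORK_START = time(8, 0)
WORK_END = time(18, 0)


def clusters_to_terminate(clusters: List[dict], now: datetime) -> List[str]:
    """Return IDs of clusters still RUNNING outside office hours."""
    is_weekend = now.weekday() >= 5  # Saturday or Sunday
    in_office_hours = WORK_START <= now.time() < WORK_END
    if in_office_hours and not is_weekend:
        return []  # people may legitimately be working
    return [c["cluster_id"] for c in clusters if c.get("state") == "RUNNING"]


# Hypothetical sample of a list response
sample = [
    {"cluster_id": "0101-000000-abc1", "cluster_name": "etl-dev", "state": "RUNNING"},
    {"cluster_id": "0101-000000-def2", "cluster_name": "adhoc", "state": "TERMINATED"},
]

print(clusters_to_terminate(sample, datetime(2019, 11, 20, 21, 0)))  # ['0101-000000-abc1']
print(clusters_to_terminate(sample, datetime(2019, 11, 20, 11, 0)))  # []
```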

Here’s how to burn over 3600 Eur with Azure Synapse Pools (SQL Data Warehouse)

The second blunder: a classic start-and-forget scenario.

We had a small workshop with demanding business users. They regularly escalate even the smallest issues to the C-level. We call them “clients from hell”.

I trust you can find similar users within your organization :)

Our “clients from hell” are advanced data analysts who specialize in SQL. They want to crunch dozens of terabytes.

After some planning, we decided to organize a small workshop and let them play with SQL Data Warehouse (Azure Synapse SQL Pools).

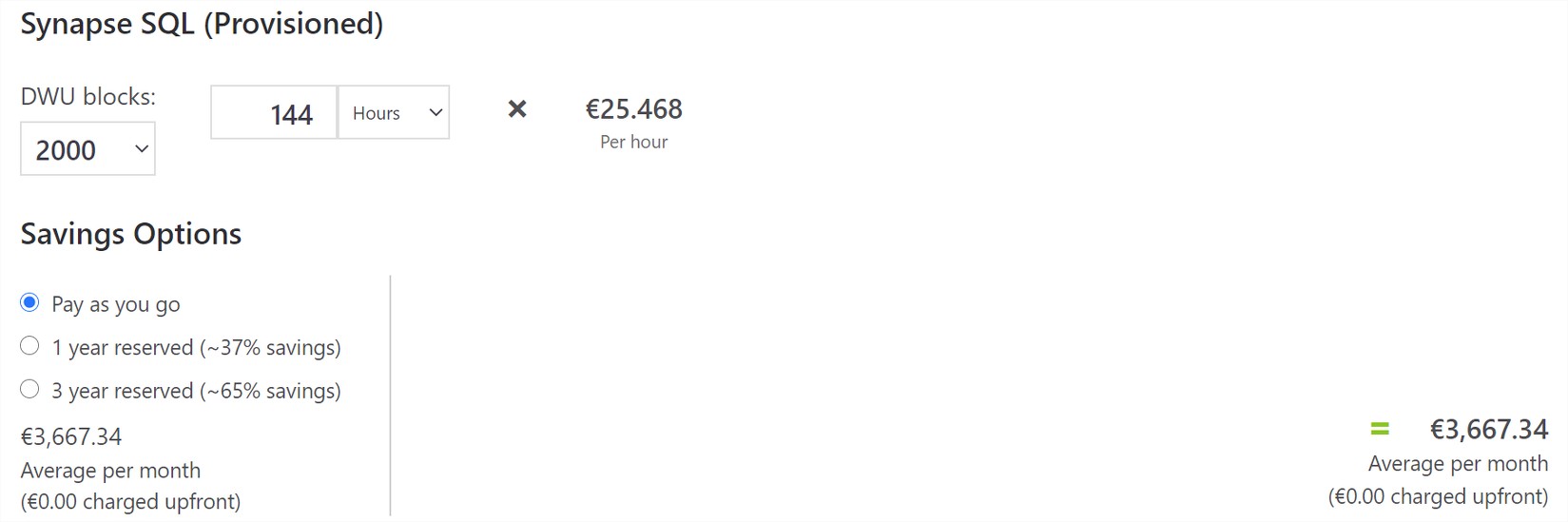

Initially, our team thought that DWU 500 might be enough. But one engineer wanted to impress our users and increased it to DWU 2000.
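Why does that one change matter so much? A minimal sketch, assuming the hourly price of a dedicated SQL pool scales linearly with the DWU level (that is how I read the Azure price list; check the current one for your region). The rate is a hypothetical placeholder.

```python
def hourly_pool_cost(dwu: int, price_per_100_dwu_hour: float) -> float:
    """Hourly cost, assuming price grows linearly with the DWU level."""
    return dwu / 100 * price_per_100_dwu_hour


RATE = 1.5  # hypothetical Eur per 100 DWU per hour, not a real price

for level in (500, 2000):
    print(f"DWU {level}: {hourly_pool_cost(level, RATE):.2f} Eur/hour")
```

Whatever the actual rate is, DWU 2000 costs four times as much per hour as DWU 500.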

The workshop was a huge success!

We received positive feedback and felt like heroes. And, of course, we went to a bar to celebrate.

What about Azure Synapse SQL Pool?

We remembered it a week later. It had run for nearly 144 hours instead of 8.
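The two mistakes multiply. A back-of-the-envelope sketch, again assuming linear DWU pricing and a hypothetical rate, compares the original plan (DWU 500 for one 8-hour day) with what actually happened (DWU 2000 for about 144 hours).

```python
def pool_cost(dwu: int, hours: float, price_per_100_dwu_hour: float) -> float:
    """Total compute cost, assuming price grows linearly with DWU and uptime."""
    return dwu / 100 * price_per_100_dwu_hour * hours


RATE = 1.5  # hypothetical Eur per 100 DWU per hour, not a real price

planned = pool_cost(500, 8, RATE)     # the plan: DWU 500 for the workshop day
actual = pool_cost(2000, 144, RATE)   # reality: DWU 2000, forgotten for ~6 days

print(f"Planned: {planned:.0f} Eur, actual: {actual:.0f} Eur")
print(f"Multiplier: {actual / planned:.0f}x")  # 4x (DWU) * 18x (hours) = 72x
```

The 72x multiplier does not depend on the rate. Pausing the pool right after the workshop would have removed the 18x part, since a paused dedicated SQL pool is billed only for storage.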

Luckily, the clients enjoyed the workshop and the new possibilities, and didn't mind the increased cost too much.

Lesson #2: There are a few very expensive services. Azure Synapse SQL Pools is one of them. Use it with caution!

To sum up, don't blindly trust yourself, your team or your cloud provider. Trust, but verify.

First, set up pricing alerts (I wrote a blog post about it). However, alerts are reactive: you get notified only once something has already gone wrong, and by then it might be too late.
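To see how reactive a threshold alert is, here is a small simulation. It assumes a monthly budget with an alert at 80% of actual spend, a normal 50 Eur/day, and a forgotten resource adding 600 Eur/day from day 10; all numbers are hypothetical. In practice the alert fires even later, because cloud cost data is not real-time.

```python
from typing import List, Optional


def first_alert_day(daily_spend: List[float], budget: float, threshold_pct: float) -> Optional[int]:
    """Return the 1-based day on which cumulative spend first reaches the threshold."""
    total = 0.0
    for day, spend in enumerate(daily_spend, start=1):
        total += spend
        if total >= budget * threshold_pct / 100:
            return day
    return None


# Hypothetical month: 50 Eur/day, plus a forgotten resource at 600 Eur/day from day 10
spend = [50.0] * 9 + [650.0] * 21

day = first_alert_day(spend, budget=5000.0, threshold_pct=80)
print(f"Alert on day {day}, after {sum(spend[:day]):.0f} Eur were already spent")
```

By the time the alert arrives, the forgotten resource has already run for six days.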

Secondly, use all services with caution. Set up reminders in Outlook to verify usage from time to time. Double-check your automation scripts. Encourage your team to shut down resources and not to rely blindly on auto-shutdown features.

I also recorded a short video about it. Check it out.